Station 2: The Structured Clinical Examination Background

The General Medical Council provides advice to medical schools on assessment processes in Tomorrow’s Doctors (2009)1 to ensure that all schools meet quality assurance standards in basic medical education. Its supplementary advice on assessment in undergraduate medical education2 recommends that medical schools use a range of assessment techniques that are valid, reliable and appropriate to the curriculum, so that the competency of medical students is assessed accurately. Most importantly, this ensures that only students who meet the outcomes of the assessment are permitted to graduate and practise as doctors3.

Many examiners may recall the traditional “long case” assessment at the end of medical school. Typically, the student spent an hour with a patient, unobserved by the examiners, taking a history and performing a clinical examination. This was followed by a presentation of the findings to the examiners and unstructured questioning. The method was inherently unreliable and prone to bias, with an overall lack of standardisation3,4,5. The cases chosen were not necessarily of equal complexity, and there was also considerable variation between examiners and in the questions they asked students4.

The first objective structured clinical examination was described by Harden and Gleeson as far back as 19793. Since the late 1990s, however, most medical schools have adopted this approach, standardising patients and mark schemes (making the assessment more objective and structured) and including a wider variety of clinical tasks to increase the reliability and validity of exit examinations, and so ensure that students are competent to graduate.

What is an Objective Structured Clinical Examination (OSCE)?

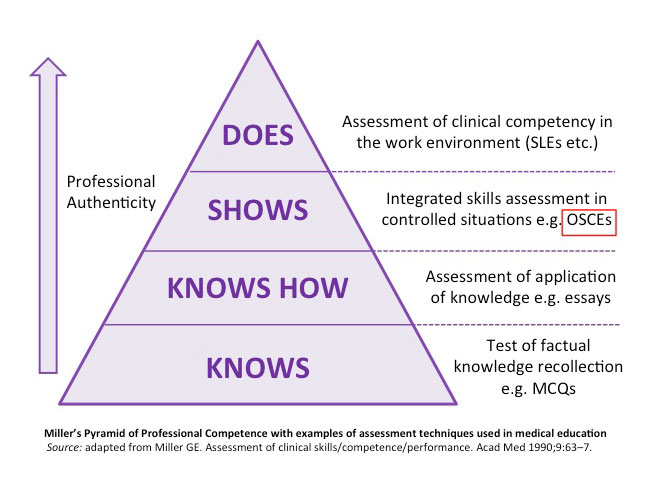

Despite differing terminology between medical schools, all of the assessments described below are designed to assess students’ clinical competency quantitatively at the bedside, using stations that replicate what students will be doing when they enter clinical practice3. They assess the “shows how” level of Miller’s pyramid.

Over the years, OSCE stations have evolved beyond basic clinical skills to assess more complex patient presentations, interprofessional relationships and aspects of professionalism, representing real-life clinical situations and increasing the validity of the assessment5,10. Different medical schools use slightly different names for their clinical examinations; for example:

- OSCE: Objective Structured Clinical Examination (the most common form of clinical assessment at medical school)

- OSSE: Objective Structured Skills Examination

- OSATS: Objective Structured Assessment of Technical Skills

- OSLER: Objective Structured Long Examination Record

- PACES: Practical Assessment of Clinical Examination Skills

Each of these assessments includes:

- Multiple clinical encounters

- A variety of clinical tasks blueprinted to the medical school’s curriculum

- Multiple examiners

- Standardised mark sheets

Because highly structured mark sheets are used, stations can be marked by many different examiners, on different sites and on different days; this reduces individual assessor bias and makes the assessment reproducible. Increasing the number of stations also increases the reliability of the assessment. Each medical school calculates the reliability of its own assessment.
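
The text does not say which statistic schools use, but reliability across stations is often reported as Cronbach's alpha. As a minimal sketch, assuming a hypothetical matrix of marks with one row per candidate and one column per station (the function name and the marks are invented for illustration), alpha can be computed as follows:

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a candidates x stations matrix of marks."""
    k = len(scores[0])  # number of stations
    # Variance of each station's marks across candidates
    station_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    # Variance of candidates' total marks across all stations
    total_var = pvariance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(station_vars) / total_var)


# Invented marks: 5 candidates (rows) on 4 stations (columns)
marks = [
    [14, 12, 15, 13],
    [10, 9, 11, 10],
    [18, 16, 17, 19],
    [12, 13, 12, 11],
    [16, 15, 14, 16],
]
print(f"alpha = {cronbach_alpha(marks):.2f}")  # alpha = 0.97
```

Alpha is high when stations rank candidates consistently. Some schools use generalisability theory rather than alpha, so this should be read as an illustration rather than any particular school's method.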

Despite its popularity, the structured clinical examination is still not a “perfect assessment”, as it does not assess the full range of a candidate’s competency. Concerns have been raised about the time available in each station to assess each competency, and about the negative effect that learning for an assessment can have on students. Postgraduate examinations have also adopted the carousel method of structured clinical examination. For example, the Royal College of Physicians introduced the Practical Assessment of Clinical Examination Skills (PACES) examination in 2001. It consists of five discrete stations, each assessed by two independent examiners, and tests examination, communication and history-taking skills in longer stations of 15-20 minutes.

All the structured clinical assessments share many similarities, and there is evidence that they are useful tests of clinical competency; however, most medical schools use multiple methods of assessment to judge a candidate’s performance over the entire course (for example, workplace-based, written and online assessments).

How does a structured clinical examination run?

The examination is arranged as a series of sequential, task-orientated stations that are blueprinted to the medical school curriculum in order to test a wide variety of clinical, technical and practical skills3,5,6. To ensure consistency, all students sit the same assessment, in the same amount of time, with the same structured mark sheet (which is often read electronically); this helps to reduce examiner bias and maintain the reliability of the exam.

The examination may even run on different sites and over a number of days to accommodate the large number of students who need to be examined, which is why it is so important that examiners mark in the same way, to ensure the reliability of the assessment6. Each task is broken down into a series of components, and the examiner is asked to assess the candidate’s ability to perform each component of that task.

So that the examination simulates real practice, a mix of simulated and real patients is often used. Real patients have the advantage of stable clinical signs for students to elicit, and allow students to demonstrate their doctor-patient interaction. Simulated patients are given clear guidance about their role and often have a patient narrative from which their responses can be established7.

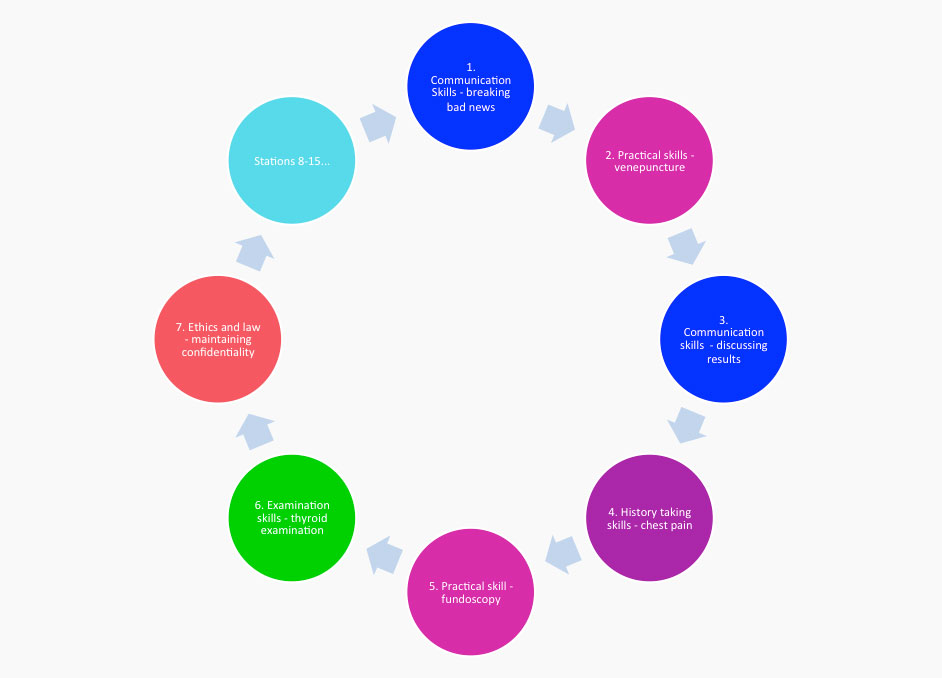

An example of a structured clinical examination circuit is shown above. This circuit includes assessment of communication skills (blue), practical skills (pink), history-taking skills (purple), core examination skills (green) and ethics and law (red). (Adapted from Gormley G. 2011 Summative OSCEs in Undergraduate Medical Education. Ulster Med J; 80(3): 127-132)6

Why do we have a structured clinical examination?

The General Medical Council (GMC) recommends that students are able to demonstrate their acquisition of the knowledge, skills and behaviours outlined in their medical school curriculum, which mirrors the recommendations in Tomorrow’s Doctors (2009). This method of assessment was created to ensure that students meet the minimum standards required of a foundation doctor and that they will be competent to work on the ward from their first day.

Patients could be placed at risk if a candidate is judged to be competent without having met a satisfactory standard of practice.

Sequential Testing in structured clinical examinations

What is Sequential Testing for structured clinical examinations?

In sequential testing, an initial structured clinical examination circuit is used to identify a group of students whose marks allow the testing agency to state with a high degree of confidence that they have passed and require no further testing. Identifying this group, by default, also identifies a second group for whom further testing is required. The approach rests on the premise that every test carries some degree of measurement error, particularly tests of clinical skills. Sequential testing seeks to minimise the risk of passing students who should have failed, and failing students who should have passed, by conducting additional testing. This extra ‘sampling’ gives more confidence in pass/fail decisions for candidates identified as having failed or as being in the borderline category9.

Why is this beneficial?

One obvious benefit of sequential testing is that it allows further sampling of the performance of the group about whom testing agencies are most concerned: those clustered around the pass/fail point. In effect, these students sit a longer structured clinical examination, which increases the reliability of the decision made about them. The sequential test (second circuit) takes place without any intervening period of remediation, and no feedback on performance is provided in the meantime.
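
The gain from a longer examination can be illustrated with the Spearman-Brown prophecy formula, which predicts the reliability of a test lengthened by a given factor, assuming the extra stations behave like the original ones. This is an illustration chosen here, not a method the text attributes to any school; the function name `spearman_brown` and the starting alpha of 0.6 are hypothetical.

```python
def spearman_brown(alpha: float, length_factor: float) -> float:
    """Predicted reliability after lengthening a test by length_factor.

    Assumes the added stations are parallel to (behave like) the existing ones.
    """
    return (length_factor * alpha) / (1 + (length_factor - 1) * alpha)


# Hypothetical 10-station circuit with alpha = 0.6, extended to 20 stations
alpha_10 = 0.6
alpha_20 = spearman_brown(alpha_10, length_factor=2)
print(f"10 stations: {alpha_10:.2f}, 20 stations: {alpha_20:.2f}")  # 10 stations: 0.60, 20 stations: 0.75
```

Doubling the number of comparable stations raises the projected reliability, which is the reason a second circuit makes borderline decisions more dependable.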

What is the Structure of Sequential Testing?

In one example, all students sit a 10-station structured clinical examination (the Exemption Structured Clinical Examination). The borderline regression method (BRM) is used to set the cut mark. Students who score above the BRM cut mark plus two standard errors of measurement (SEM), and who have no more than one flag for professionalism, are classed as exempt from further testing. The rest of the cohort are notified around one week after the initial circuit, which allows the examinations office time to quality-control the initial circuit and perform the relevant psychometric analyses. These students then sit a second ten-station circuit (the Confirmation Structured Clinical Examination) two or three days after notification.
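
The exemption rule can be sketched as below. This assumes a common form of the borderline regression method, in which each station's checklist scores are regressed on the examiners' global grades and the station cut is the predicted checklist score at the "borderline" grade. The 0-4 grade scale, the use of the population standard deviation, SEM = SD * sqrt(1 - alpha), and all function names and data are hypothetical choices for illustration, not the scheme of any particular school.

```python
from statistics import mean, pstdev

# Hypothetical global-grade scale: 0 = clear fail, 1 = borderline,
# 2 = clear pass, 3 = good, 4 = excellent
BORDERLINE_GRADE = 1


def station_cut(checklist: list[float], grades: list[int]) -> float:
    """Borderline regression: fit checklist = a + b * grade by least squares,
    then return the predicted checklist score at the borderline grade."""
    g_bar, c_bar = mean(grades), mean(checklist)
    sxy = sum((g - g_bar) * (c - c_bar) for g, c in zip(grades, checklist))
    sxx = sum((g - g_bar) ** 2 for g in grades)
    slope = sxy / sxx
    intercept = c_bar - slope * g_bar
    return intercept + slope * BORDERLINE_GRADE


def exam_cut(stations: list[tuple[list[float], list[int]]]) -> float:
    """Examination cut mark: the sum of the station cut marks."""
    return sum(station_cut(checklist, grades) for checklist, grades in stations)


def standard_error(totals: list[float], alpha: float) -> float:
    """SEM = SD of total scores * sqrt(1 - reliability)."""
    return pstdev(totals) * (1 - alpha) ** 0.5


def is_exempt(total: float, flags: int, cut: float, sem: float) -> bool:
    """Exempt if above cut + 2 SEM with at most one professionalism flag."""
    return total > cut + 2 * sem and flags <= 1


# Invented data: two stations, five candidates in the same order in every list
station_a = ([6, 9, 12, 15, 18], [0, 1, 2, 3, 4])
station_b = ([5, 8, 12, 14, 19], [0, 1, 2, 3, 4])
cut = exam_cut([station_a, station_b])
totals = [a + b for a, b in zip(station_a[0], station_b[0])]
sem = standard_error(totals, alpha=0.7)
flags = [0, 0, 2, 0, 1]
exempt = [is_exempt(t, f, cut, sem) for t, f in zip(totals, flags)]
print(exempt)  # [False, False, False, True, True]
```

Candidates not marked exempt would go on to the Confirmation circuit. A real circuit would have ten stations and far more candidates, which makes the SEM much smaller relative to the cut mark than in this toy example.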

For this group of students, the two circuits are combined to form a 20-station examination. The cut mark is again set using BRM, but this time with the addition of only 1 SEM, reflecting the improved reliability of the longer examination. The alpha for the twenty stations is calculated using a D-analysis based on the alpha for the first circuit. This is because the students taking the Confirmation circuit are a skewed sample of the entire cohort, whereas the pass threshold is linked to whole-cohort performance.
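
The Confirmation decision can be sketched in the same spirit. For the simplest design (candidates crossed with stations), a decision study projects reliability from n to n' stations by treating the candidate-by-station variance as error that shrinks in proportion to the number of stations; in this one-facet case the result is algebraically the same as the Spearman-Brown formula. A school's actual D-analysis may model more facets. The text does not say how the standard deviation for the SEM is obtained for the skewed confirmation group, so here it is simply passed in; the 20-station BRM cut is likewise assumed to have been set already, and every name and number is hypothetical.

```python
def projected_reliability(alpha_n: float, n: int, n_new: int) -> float:
    """One-facet D-study: project reliability observed with n stations to n_new.

    In a candidates x stations design, alpha_n = var_p / (var_p + var_e / n),
    so the error-to-true variance ratio is var_e / var_p = n * (1 - alpha_n) / alpha_n.
    """
    error_ratio = n * (1 - alpha_n) / alpha_n
    return 1 / (1 + error_ratio / n_new)


def confirmation_pass(combined: float, cut_20: float, sd_20: float, alpha_10: float) -> bool:
    """Pass the combined 20-station examination if at or above BRM cut + 1 SEM."""
    alpha_20 = projected_reliability(alpha_10, n=10, n_new=20)
    sem_20 = sd_20 * (1 - alpha_20) ** 0.5
    return combined >= cut_20 + sem_20


# Hypothetical figures: first-circuit alpha 0.6, 20-station cut 120, SD 8
print(round(projected_reliability(0.6, 10, 20), 2))  # 0.75
print(confirmation_pass(126, cut_20=120, sd_20=8, alpha_10=0.6))  # True
print(confirmation_pass(123, cut_20=120, sd_20=8, alpha_10=0.6))  # False
```

Projecting from the first circuit, rather than computing alpha directly from the confirmation group's marks, avoids the underestimate that a restricted, lower-scoring sample would otherwise produce.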

Other contemporaneous clinical examination issues

All of the following issues vary across medical school sites and should be clarified before the exam starts6.

- Patient ratings

Some schools ask examiners to record patient or role-player ratings on the mark sheets. A checklist will be provided to help with patient or actor scoring.

- Quarantining of candidates

Where exams span a whole day with several separate sittings, students may be quarantined to prevent them from discussing the content of the exam with each other.

- Serious concern reporting

Many schools have adopted a separate form for examiners to report serious concerns about a student’s professional behaviour. Poor performance is reflected in a low score on the mark sheet; however, concerns about the student’s interaction with the patient or about clinical safety may be reported separately and could affect whether the student passes the exam, independent of their score.

- Examining on stations outside of your specialty

On the whole, it is recommended that examiners in final-year examinations examine on stations outside their specialty. Even with adequate training, examiners assessing stations related to their own specialty (e.g. a cardiologist examining on a cardiovascular examination station) tend to have higher expectations of examination content and technique, which may lead them to mark more harshly.

- “Killer stations”

All medical schools agree that a structured clinical examination should not contain any station that must be passed in order to pass the entire examination. Such stations give an unfair assessment of a student’s overall ability to perform as a competent doctor, because in reality doctors can seek help from colleagues and should be able to recognise the limits of their own ability.

- Feedback on candidate performance

Most schools will ask you to provide some written feedback on each candidate’s performance to help justify your score or to help with the candidate’s future development. It is worth finding out if your feedback is to be directly copied to the candidate before you start marking.

References:

- General Medical Council. Tomorrow’s Doctors (2009). London: General Medical Council; 2009.

- General Medical Council. Assessment in Medical Education (Supplement to Tomorrow’s Doctors 2009). London: General Medical Council (accessed 26 April 2015).

- Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Medical Education 1979; 13(1): 41-54.

- Low-Beer N, Lupton M, Warner J, Booton P, Blair M, Almarez Serrano A, Higham J. Adapting and implementing PACES as a tool for undergraduate assessment. The Clinical Teacher 2008; 5: 239-244.

- Boursicot K, et al. Performance in assessment: consensus statement and recommendations from the Ottawa Conference. Medical Teacher 2011; 33(5): 370-383.

- Norcini J. The validity of long cases. Medical Education 2001; 17(3): 165-171.

- Gormley G, Sterling M, Menary A, McKeown G. Keeping it real! Enhancing realism in standardised patient OSCE stations. The Clinical Teacher 2012; 9: 382-386.

- Gormley G. Summative OSCEs in undergraduate medical education. Ulster Medical Journal 2011; 80(3): 127-132.

- Pell G, Fuller R, Homer M, Roberts T. Advancing the objective structured clinical examination: sequential testing in theory and practice. Medical Education 2013; 47(6): 569-577.

- Newble D. Techniques for measuring clinical competence: objective structured clinical examinations. Medical Education 2004; 38(2): 199-232.